Corresponding publications:

- Mario Frank, Ralf Biedert, Eugene Ma, Ivan Martinovic, and Dawn Song. "Touchalytics: On the Applicability of Touchscreen Input as a Behavioral Biometric for Continuous Authentication." In IEEE Transactions on Information Forensics and Security (Vol. 8, No. 1), pages 136-148, IEEE, 2013.


- Mario Frank, Ralf Biedert, Eugene Ma, Ivan Martinovic, and Dawn Song. "Touchalytics: On the Applicability of Touchscreen Input as a Behavioral Biometric for Continuous Authentication." Winner of the best poster award (1 out of 22) at the Intel SCRUB retreat 2013.


- Neil Zhenqiang Gong, Mathias Payer, Reza Moazzezi, and Mario Frank. "Forgery-Resistant Touch-based Authentication on Mobile Devices." In ASIACCS '16: 11th ACM Asia Conference on Computer and Communications Security, pages 499-510, ACM, 2016 (acceptance rate 20.9%).


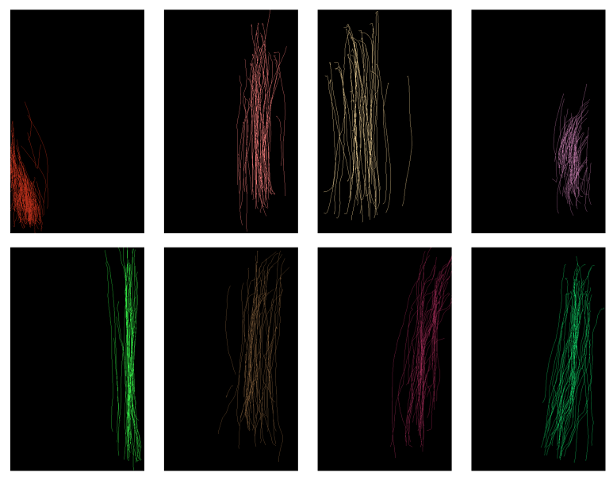

This dataset contains the raw touch data of 41 users interacting with Android smartphones, plus a set of 30 features extracted from each touch stroke.

Download:

data.zip:

Raw touch data, comma-separated.

The columns are 'phone ID', 'user ID', 'document ID', 'time[ms]', 'action', 'phone orientation', 'x-coordinate', 'y-coordinate', 'pressure', 'area covered', and 'finger orientation'.

The column 'action' takes one of three values: 0 (touch down), 1 (touch up), or 2 (move).
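The Python sketch below reads rows in the column order listed above and decodes the 'action' codes. It assumes the file has no header row and that every field is numeric; the snake_case keys and the sample rows are made up for illustration, so check the readme before relying on these assumptions.

```python
import csv
import io

# Hypothetical snake_case keys, in the column order described above.
# Assumption: no header row, and every field parses as a number.
COLUMNS = [
    "phone_id", "user_id", "doc_id", "time_ms", "action",
    "phone_orientation", "x", "y", "pressure", "area", "finger_orientation",
]

# Codes of the 'action' column, as documented above.
ACTION_NAMES = {0: "touch down", 1: "touch up", 2: "move"}


def parse_events(lines):
    """Turn comma-separated raw touch rows into a list of dicts."""
    events = []
    for row in csv.reader(lines):
        if not row:
            continue  # tolerate blank lines
        if len(row) != len(COLUMNS):
            raise ValueError(f"expected {len(COLUMNS)} fields, got {len(row)}")
        event = {name: float(value) for name, value in zip(COLUMNS, row)}
        event["action"] = ACTION_NAMES[int(event["action"])]
        events.append(event)
    return events


# Made-up rows for illustration only; not taken from data.zip.
sample = io.StringIO(
    "1,1,1,1000,0,1,100.0,200.0,0.5,0.1,0.0\n"
    "1,1,1,1016,2,1,110.0,190.0,0.6,0.1,0.0\n"
    "1,1,1,1032,1,1,120.0,180.0,0.4,0.1,0.0\n"
)
events = parse_events(sample)
print([e["action"] for e in events])  # ['touch down', 'move', 'touch up']
```

For a real file, pass an open file object instead, e.g. `with open(path, newline="") as f: events = parse_events(f)`, where `path` points to the extracted CSV.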

Please find more detailed information in the readme.

features.mat (MATLAB format) and features.zip (comma-separated):

Extracted features for each touch stroke, plus descriptive strings naming all features. The columns 'user id', 'doc id', and 'phone id' contain labels rather than features; do not use them as classifier inputs when testing.
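A minimal Python sketch of separating the label columns from the feature columns by name. It assumes the column names are available (for example from the descriptive strings) and match 'user id', 'doc id', and 'phone id' exactly; the two feature names and all values below are placeholders, not the dataset's actual contents.

```python
# Label columns named above; assumed to match the column names exactly.
LABEL_COLUMNS = ("user id", "doc id", "phone id")


def split_labels(header, rows, label_columns=LABEL_COLUMNS):
    """Split each row into feature values and label values by column name."""
    label_idx = [header.index(name) for name in label_columns]
    feature_idx = [i for i in range(len(header)) if i not in label_idx]
    feature_names = [header[i] for i in feature_idx]
    features = [[row[i] for i in feature_idx] for row in rows]
    labels = [[row[i] for i in label_idx] for row in rows]
    return feature_names, features, labels


# Placeholder header and made-up values, for illustration only.
header = ["user id", "doc id", "phone id", "stroke duration", "mean pressure"]
rows = [[3, 1, 2, 0.25, 0.6], [5, 2, 1, 0.40, 0.4]]

names, X, y = split_labels(header, rows)
print(names)  # ['stroke duration', 'mean pressure']
```

Only `X` would then be fed to a classifier; the first entry of each label row (the user id) can serve as the target when authenticating users.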

Please find more detailed information in the readme and in our paper.

For Weka users, Marcelo Damasceno converted the dataset to ARFF format: data_arff.zip. Thanks!

extractFeatures.m:

A MATLAB script to extract features from raw touch data.

Applied to the extracted contents of data.zip, it generates features.mat.
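To illustrate what "per touch stroke" means, here is a hedged Python sketch (not a port of extractFeatures.m) that groups raw events into strokes: a touch down (0) opens a stroke, moves (2) extend it, and a touch up (1) closes it. It assumes the events belong to one user, document, and phone and are sorted by time. The two features it computes are illustrative and are not claimed to match the script's definitions.

```python
import math


def split_strokes(events):
    """Group time-ordered (time_ms, action, x, y) tuples into strokes.

    Assumes action codes 0 = touch down, 1 = touch up, 2 = move.
    """
    strokes, current = [], None
    for t, action, x, y in events:
        if action == 0:
            # Touch down opens a new stroke (an unfinished one is dropped).
            current = [(t, x, y)]
        elif current is not None:
            # Moves and the final touch up extend the open stroke.
            current.append((t, x, y))
            if action == 1:
                strokes.append(current)
                current = None
    return strokes


def basic_stroke_features(stroke):
    """Two illustrative features; not necessarily defined as in extractFeatures.m."""
    (t0, x0, y0), (t1, x1, y1) = stroke[0], stroke[-1]
    return {
        "duration_ms": t1 - t0,
        "end_to_end_distance": math.hypot(x1 - x0, y1 - y0),
    }


# Made-up events: one short swipe, then a tap.
events = [
    (1000, 0, 100.0, 200.0),
    (1016, 2, 101.0, 202.0),
    (1032, 1, 103.0, 204.0),
    (2000, 0, 50.0, 50.0),
    (2100, 1, 50.0, 50.0),
]
strokes = split_strokes(events)
features = [basic_stroke_features(s) for s in strokes]
print(features)
# [{'duration_ms': 32, 'end_to_end_distance': 5.0},
#  {'duration_ms': 100, 'end_to_end_distance': 0.0}]
```

Moves or touch ups that arrive without a preceding touch down are ignored here; how the actual script treats such cases is described in the readme and paper, not in this sketch.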